torchfilter.base

Base classes for filtering.

Package Contents

Classes

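DynamicsModel: Base class for a generic differentiable dynamics model, with additive white Gaussian noise.

Filter: Base class for a generic differentiable state estimator.

KalmanFilterBase: Base class for a generic Kalman-style filter. Parameterizes beliefs with a mean and covariance.

KalmanFilterMeasurementModel: Measurement model base class for Kalman filters. Maps states to expected observations and the Cholesky decomposition of their covariance.

ParticleFilterMeasurementModel: Observation model base class for a generic differentiable particle filter.

ParticleFilterMeasurementModelWrapper: Helper class for creating a particle filter measurement model (states, observations -> log-likelihoods) from a Kalman filter one (states -> observations).

VirtualSensorModel: Virtual sensor base class for our differentiable Kalman filters.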

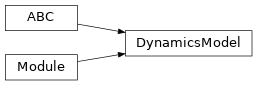

- class torchfilter.base.DynamicsModel(*, state_dim: int)

Bases: torch.nn.Module, abc.ABC

Base class for a generic differentiable dynamics model, with additive white Gaussian noise.

Subclasses should override either forward() or forward_loop() for computing dynamics estimates.

- state_dim

Dimensionality of our state.

- Type:

int

- forward(self, *, initial_states: types.StatesTorch, controls: types.ControlsTorch) → Tuple[types.StatesTorch, types.ScaleTrilTorch]

Dynamics model forward pass, single timestep.

Computes both predicted states and uncertainties. The uncertainties correspond to (the Cholesky decompositions of) the “Q” matrices in a standard linear dynamical system with additive white Gaussian noise. In other words, they should be lower triangular and should not accumulate: the uncertainty at time t should be computed as if the estimate at time t - 1 were a ground-truth input.

By default, this is implemented by bootstrapping the forward_loop() method.

- Parameters:

initial_states (torch.Tensor) – Initial states of our system. Shape should be (N, state_dim).

controls (dict or torch.Tensor) – Control inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

- Returns:

Tuple[torch.Tensor, torch.Tensor] – Predicted states and uncertainties. States should have shape (N, state_dim), and uncertainties should be lower triangular with shape (N, state_dim, state_dim).
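To make the forward() contract concrete, here is a minimal sketch of a linear dynamics model x_t = A x_{t-1} + B u_t + w. The class name and its parameters `A`, `B`, and `log_std` are hypothetical. To keep the example self-contained it subclasses torch.nn.Module directly instead of importing torchfilter. It shows the expected output shapes and a Q factor that is lower triangular and does not accumulate across steps.

```python
import torch
import torch.nn as nn


class LinearDynamicsModel(nn.Module):
    """Hypothetical model x_t = A x_{t-1} + B u_t + w, with w ~ N(0, Q).

    A real implementation would subclass torchfilter.base.DynamicsModel;
    nn.Module is used here so the sketch runs without torchfilter.
    """

    def __init__(self, state_dim: int, control_dim: int):
        super().__init__()
        self.state_dim = state_dim
        self.A = nn.Parameter(torch.eye(state_dim))
        self.B = nn.Parameter(torch.zeros(state_dim, control_dim))
        # Learnable log standard deviations, so Q = diag(exp(log_std))^2.
        self.log_std = nn.Parameter(torch.full((state_dim,), -1.0))

    def forward(self, *, initial_states: torch.Tensor, controls: torch.Tensor):
        N = initial_states.shape[0]
        # Batched linear update: (N, state_dim).
        predicted_states = initial_states @ self.A.T + controls @ self.B.T
        # Cholesky factor of Q: diagonal, hence lower triangular. It ignores any
        # previous uncertainty, so uncertainties do not accumulate over time.
        scale_tril = torch.diag_embed(self.log_std.exp()).expand(N, -1, -1)
        return predicted_states, scale_tril


model = LinearDynamicsModel(state_dim=3, control_dim=2)
states, scale_tril = model(initial_states=torch.randn(8, 3), controls=torch.randn(8, 2))
```

A diagonal Q is the simplest valid choice. A full lower-triangular factor with a positive diagonal would also satisfy the contract.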

- forward_loop(self, *, initial_states: types.StatesTorch, controls: types.ControlsTorch) → Tuple[types.StatesTorch, torch.Tensor]

Dynamics model forward pass, over sequence length T and batch size N. By default, this is implemented by iteratively calling forward().

Computes both predicted states and uncertainties. The uncertainties correspond to (the Cholesky decompositions of) the “Q” matrices in a standard linear dynamical system with additive white Gaussian noise. In other words, they should be lower triangular and should not accumulate: the uncertainty at time t should be computed as if the estimate at time t - 1 were a ground-truth input.

To inject code between timesteps (for example, to inspect hidden state), use register_forward_hook().

- Parameters:

initial_states (torch.Tensor) – Initial states to pass to our dynamics model. Shape should be (N, state_dim).

controls (dict or torch.Tensor) – Control inputs. Should be either a dict of tensors or a tensor of shape (T, N, ...).

- Returns:

Tuple[torch.Tensor, torch.Tensor] – Predicted states and uncertainties. States should have shape (T, N, state_dim), and uncertainties should be lower triangular with shape (T, N, state_dim, state_dim).
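The default behaviour described above, iteratively calling forward() over the time axis, can be sketched as a standalone function. The function name `forward_loop_by_iteration` and the toy model are hypothetical. Dict-valued controls are left out for brevity, and torchfilter's actual default may differ in such details.

```python
import torch


def forward_loop_by_iteration(forward, *, initial_states, controls):
    """Roll a single-step dynamics function over T timesteps.

    forward: callable(initial_states=(N, D), controls=(N, ...)) -> (states, scale_tril)
    controls: tensor of shape (T, N, ...).
    """
    T = controls.shape[0]
    states = initial_states
    state_list, tril_list = [], []
    for t in range(T):
        # Each step consumes the previous prediction as if it were ground truth,
        # so each returned scale_tril is a per-step Q factor, not an accumulated one.
        states, scale_tril = forward(initial_states=states, controls=controls[t])
        state_list.append(states)
        tril_list.append(scale_tril)
    return torch.stack(state_list), torch.stack(tril_list)


def toy_forward(*, initial_states, controls):
    # Hypothetical integrator: x_t = x_{t-1} + u_t, with constant Q = 0.01 I.
    N, D = initial_states.shape
    return initial_states + controls, 0.1 * torch.eye(D).expand(N, D, D)


states, trils = forward_loop_by_iteration(
    toy_forward, initial_states=torch.zeros(4, 2), controls=torch.ones(5, 4, 2)
)
```

Here `states` has shape (5, 4, 2), and after five unit controls every entry of `states[-1]` equals 5.0.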

- jacobian(self, initial_states: types.StatesTorch, controls: types.ControlsTorch) → torch.Tensor

Returns the Jacobian of the dynamics model with respect to the state.

- Parameters:

initial_states (torch.Tensor) – Current state. Shape should be (N, state_dim).

controls (dict or torch.Tensor) – Control inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

- Returns:

torch.Tensor – Jacobian. Shape should be (N, state_dim, state_dim).
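One way to obtain an (N, state_dim, state_dim) Jacobian is automatic differentiation with `torch.autograd.functional.jacobian`. The sketch below relies on one assumption: each batch element's output depends only on its own input. Under that assumption, summing the outputs over the batch before differentiating yields every per-element Jacobian in a single call. The helper name `batched_dynamics_jacobian` is hypothetical, and torchfilter's own jacobian() may be computed differently.

```python
import torch


def batched_dynamics_jacobian(dynamics_fn, initial_states, controls):
    """Return d f(x, u) / d x for every batch element, shape (N, D, D).

    Assumes dynamics_fn maps (N, D) states and (N, ...) controls to (N, D)
    predicted states, with batch elements processed independently.
    """

    def summed(x):
        # Valid because output n depends only on input n.
        return dynamics_fn(x, controls).sum(dim=0)  # (D,)

    jac = torch.autograd.functional.jacobian(summed, initial_states)  # (D, N, D)
    return jac.permute(1, 0, 2)  # (N, D, D)


# Linear example: f(x, u) = A x + u, so every per-element Jacobian equals A.
A = torch.tensor([[1.0, 0.1], [0.0, 1.0]])
J = batched_dynamics_jacobian(lambda x, u: x @ A.T + u, torch.randn(4, 2), torch.randn(4, 2))
```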

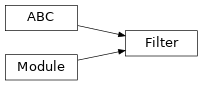

- class torchfilter.base.Filter(*, state_dim: int)

Bases: torch.nn.Module, abc.ABC

Base class for a generic differentiable state estimator.

At a minimum, subclasses should override initialize_beliefs() to populate the initial belief of the estimator, and either forward() or forward_loop() to compute state predictions.

- state_dim

Dimensionality of our state.

- Type:

int

- abstract initialize_beliefs(self, *, mean: types.StatesTorch, covariance: types.CovarianceTorch) → None

Initialize our filter with a Gaussian prior.

- Parameters:

mean (torch.Tensor) – Mean of belief. Shape should be (N, state_dim).

covariance (torch.Tensor) – Covariance of belief. Shape should be (N, state_dim, state_dim).

- forward(self, *, observations: types.ObservationsTorch, controls: types.ControlsTorch) → types.StatesTorch

Filtering forward pass, over a single timestep.

By default, this is implemented by bootstrapping the forward_loop() method.

- Parameters:

observations (dict or torch.Tensor) – Observation inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

controls (dict or torch.Tensor) – Control inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

- Returns:

torch.Tensor – Predicted state for each batch element. Shape should be (N, state_dim).
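"Bootstrapping" forward_loop() here means treating a single timestep as a sequence of length T = 1. A leading time axis is added to every input, the loop runs, and the time axis is then dropped. The sketch below illustrates the idea with hypothetical helper names (`add_time_dim`, `forward_via_forward_loop`) and a toy forward_loop. It is not torchfilter's code.

```python
import torch


def add_time_dim(x):
    """Insert a leading T = 1 axis into a tensor or a dict of tensors."""
    if isinstance(x, dict):
        return {k: v.unsqueeze(0) for k, v in x.items()}
    return x.unsqueeze(0)


def forward_via_forward_loop(forward_loop, *, observations, controls):
    """Single-timestep pass implemented by calling forward_loop with T = 1."""
    states = forward_loop(
        observations=add_time_dim(observations), controls=add_time_dim(controls)
    )  # (1, N, state_dim)
    return states[0]  # (N, state_dim)


def toy_forward_loop(*, observations, controls):
    # Hypothetical estimator: cumulative sum of the "position" observations.
    return torch.cumsum(observations["position"], dim=0)


out = forward_via_forward_loop(
    toy_forward_loop,
    observations={"position": torch.ones(3, 2)},
    controls=torch.zeros(3, 1),
)
```

The dict branch matters because observations and controls may be either tensors or dicts of tensors.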

- forward_loop(self, *, observations: types.ObservationsTorch, controls: types.ControlsTorch) → types.StatesTorch

Filtering forward pass, over sequence length T and batch size N. By default, this is implemented by iteratively calling forward().

To inject code between timesteps (for example, to inspect hidden state), use register_forward_hook().

- Parameters:

observations (dict or torch.Tensor) – Observation inputs. Should be either a dict of tensors or a tensor of shape (T, N, ...).

controls (dict or torch.Tensor) – Control inputs. Should be either a dict of tensors or a tensor of shape (T, N, ...).

- Returns:

torch.Tensor – Predicted states at each timestep. Shape should be (T, N, state_dim).
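Iterating forward() over a sequence requires slicing timestep t out of inputs that may be either (T, N, ...) tensors or dicts of such tensors. Below is a minimal sketch of that loop. The helper names and the toy `EMAFilter` are hypothetical, and the filter stands in for a stateful estimator whose forward() updates an internal belief.

```python
import torch


def index_time(x, t):
    """Select timestep t from a (T, N, ...) tensor or a dict of such tensors."""
    if isinstance(x, dict):
        return {k: v[t] for k, v in x.items()}
    return x[t]


def sequence_length(x):
    if isinstance(x, dict):
        return next(iter(x.values())).shape[0]
    return x.shape[0]


def forward_loop_via_forward(forward, *, observations, controls):
    T = sequence_length(controls)
    outputs = [
        forward(observations=index_time(observations, t), controls=index_time(controls, t))
        for t in range(T)
    ]
    return torch.stack(outputs, dim=0)  # (T, N, state_dim)


class EMAFilter:
    """Toy stateful estimator: belief <- 0.5 * belief + 0.5 * observation."""

    def __init__(self, mean):
        self.mean = mean

    def forward(self, *, observations, controls):
        self.mean = 0.5 * self.mean + 0.5 * observations["z"]
        return self.mean


f = EMAFilter(mean=torch.zeros(2, 1))
states = forward_loop_via_forward(
    f.forward, observations={"z": torch.ones(3, 2, 1)}, controls=torch.zeros(3, 2, 0)
)
```

With unit observations, the belief moves through 0.5, 0.75, and 0.875.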

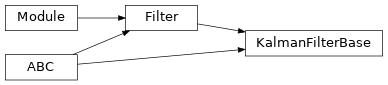

- class torchfilter.base.KalmanFilterBase(*, dynamics_model: DynamicsModel, measurement_model: KalmanFilterMeasurementModel, **unused_kwargs)

Bases: torchfilter.base.Filter, abc.ABC

Base class for a generic Kalman-style filter. Parameterizes beliefs with a mean and covariance.

Subclasses should override _predict_step() and _update_step().

- dynamics_model

Forward model.

- measurement_model

Measurement model.
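As an illustration of what _predict_step() and _update_step() compute, here are the standard linear Kalman filter equations, batched over N. The function names and explicit matrix arguments (`A`, `Q`, `C`, `R`) are hypothetical. A torchfilter subclass would instead obtain its inputs from the stored belief and from the dynamics and measurement models. Nonlinear variants such as an EKF would replace `A` and `C` with Jacobians.

```python
import torch


def predict_step(mean, covariance, A, Q):
    """Linear prediction: x' = A x, P' = A P A^T + Q (batched over N)."""
    pred_mean = mean @ A.T  # (N, D)
    pred_cov = A @ covariance @ A.T + Q  # (N, D, D)
    return pred_mean, pred_cov


def update_step(mean, covariance, observation, C, R):
    """Linear update for z = C x + v, with v ~ N(0, R)."""
    innovation = observation - mean @ C.T  # (N, M)
    S = C @ covariance @ C.T + R  # (N, M, M)
    # Kalman gain K = P C^T S^{-1}. Since P and S are symmetric,
    # K^T = S^{-1} C P, which is obtained with a solve instead of an inverse.
    K = torch.linalg.solve(S, C @ covariance).transpose(-1, -2)  # (N, D, M)
    new_mean = mean + (K @ innovation.unsqueeze(-1)).squeeze(-1)
    new_cov = covariance - K @ C @ covariance
    return new_mean, new_cov


# Scalar example: prior N(0, 1), no process noise, observation 2 with unit noise.
A = torch.eye(1)
Q = torch.zeros(1, 1)
C = torch.eye(1)
R = torch.eye(1)
mean, cov = predict_step(torch.zeros(1, 1), torch.eye(1).expand(1, 1, 1), A, Q)
mean, cov = update_step(mean, cov, torch.tensor([[2.0]]), C, R)
```

In the scalar example the gain is 1 / (1 + 1) = 0.5, so the posterior mean is 1.0 and the posterior variance is 0.5.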

- forward(self, *, observations: types.ObservationsTorch, controls: types.ControlsTorch) → types.StatesTorch

Kalman filter forward pass, single timestep.

- Parameters:

observations (dict or torch.Tensor) – Observation inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

controls (dict or torch.Tensor) – Control inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

- Returns:

torch.Tensor – Predicted state for each batch element. Shape should be (N, state_dim).

- initialize_beliefs(self, *, mean: types.StatesTorch, covariance: types.CovarianceTorch) → None

Set filter belief to a given mean and covariance.

- Parameters:

mean (torch.Tensor) – Mean of belief. Shape should be (N, state_dim).

covariance (torch.Tensor) – Covariance of belief. Shape should be (N, state_dim, state_dim).

- class torchfilter.base.KalmanFilterMeasurementModel(*, state_dim, observation_dim)

Bases: abc.ABC, torch.nn.Module

Measurement model base class for Kalman filters. Maps states to expected observations and the Cholesky decomposition of their covariance.

- state_dim

State dimensionality.

- Type:

int

- observation_dim

Observation dimensionality.

- Type:

int

- abstract forward(self, *, states: types.StatesTorch) → Tuple[types.ObservationsNoDictTorch, types.ScaleTrilTorch]

Observation model forward pass, over batch size N.

- Parameters:

states (torch.Tensor) – States to pass to our observation model. Shape should be (N, state_dim).

- Returns:

Tuple[torch.Tensor, torch.Tensor] – Expected observations and the Cholesky decomposition of their covariance. Expected observations should have shape (N, observation_dim), and the lower-triangular Cholesky factors should have shape (N, observation_dim, observation_dim).
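Any model meeting this contract must return a lower-triangular Cholesky factor with a positive diagonal. A common way to guarantee this is to keep an unconstrained matrix, take its strict lower triangle, and pass its diagonal through softplus. The sketch below applies that to a hypothetical linear model z = C x + v. It subclasses torch.nn.Module rather than torchfilter's base class so that it runs on its own, and the parameter names are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LinearMeasurementModel(nn.Module):
    """Hypothetical linear measurement model z = C x + v, with v ~ N(0, R).

    In torchfilter this would subclass KalmanFilterMeasurementModel.
    """

    def __init__(self, state_dim: int, observation_dim: int):
        super().__init__()
        self.state_dim = state_dim
        self.observation_dim = observation_dim
        self.C = nn.Parameter(torch.randn(observation_dim, state_dim))
        # Unconstrained parameters, mapped to a valid Cholesky factor in forward().
        self.raw_tril = nn.Parameter(torch.zeros(observation_dim, observation_dim))

    def forward(self, *, states: torch.Tensor):
        N = states.shape[0]
        expected_observations = states @ self.C.T  # (N, observation_dim)
        # Strictly lower part is free; softplus keeps the diagonal positive.
        scale_tril = torch.tril(self.raw_tril, diagonal=-1) + torch.diag_embed(
            F.softplus(torch.diagonal(self.raw_tril))
        )
        return expected_observations, scale_tril.expand(N, -1, -1)


model = LinearMeasurementModel(state_dim=4, observation_dim=2)
observations, scale_tril = model(states=torch.randn(5, 4))
```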

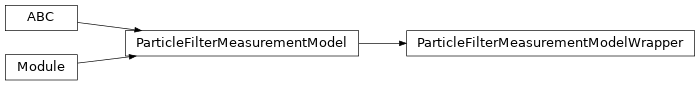

- class torchfilter.base.ParticleFilterMeasurementModel(state_dim: int)

Bases: abc.ABC, torch.nn.Module

Observation model base class for a generic differentiable particle filter; maps (state, observation) pairs to the log-likelihood of the observation given the state (\(\log p(z \mid x)\)).

- state_dim

Dimensionality of our state.

- Type:

int

- abstract forward(self, *, states: types.StatesTorch, observations: types.ObservationsTorch) → torch.Tensor

Observation model forward pass, over batch size N. For each member of a batch, we expect M separate states (particles) and one unique observation.

- Parameters:

states (torch.Tensor) – States to pass to our observation model. Shape should be (N, M, state_dim).

observations (dict or torch.Tensor) – Measurement inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

- Returns:

torch.Tensor – Log-likelihoods of each state, conditioned on a corresponding observation. Shape should be (N, M).
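The key shape detail is that each batch element has a single observation but M particles. Broadcasting the observation against the particle axis therefore yields an (N, M) tensor of log-likelihoods. This minimal sketch assumes a hypothetical observation model z = x + v with v ~ N(0, sigma² I), and it writes out the Gaussian log-density explicitly. It subclasses torch.nn.Module instead of the torchfilter base class.

```python
import math

import torch
import torch.nn as nn


class IsotropicGaussianObservationModel(nn.Module):
    """Hypothetical model z = x + v, with v ~ N(0, sigma^2 I); returns log p(z | x)."""

    def __init__(self, state_dim: int, sigma: float = 1.0):
        super().__init__()
        self.state_dim = state_dim
        self.sigma = sigma

    def forward(self, *, states, observations):
        # states: (N, M, D); observations: (N, D), broadcast over the M particles.
        residual = states - observations[:, None, :]
        D = self.state_dim
        return (
            -0.5 * (residual ** 2).sum(dim=-1) / self.sigma ** 2
            - D * math.log(self.sigma)
            - 0.5 * D * math.log(2 * math.pi)
        )  # (N, M)


model = IsotropicGaussianObservationModel(state_dim=3, sigma=0.5)
particles = torch.randn(2, 10, 3)
obs = torch.randn(2, 3)
log_likelihoods = model(states=particles, observations=obs)
```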

- class torchfilter.base.ParticleFilterMeasurementModelWrapper(kalman_filter_measurement_model: KalmanFilterMeasurementModel)

Bases: torchfilter.base.ParticleFilterMeasurementModel

Helper class for creating a particle filter measurement model (states, observations -> log-likelihoods) from a Kalman filter one (states -> observations).

- Parameters:

kalman_filter_measurement_model (torchfilter.base.KalmanFilterMeasurementModel) – Kalman filter measurement model instance to wrap.

- forward(self, *, states: types.StatesTorch, observations: types.ObservationsTorch) → torch.Tensor

Observation model forward pass, over batch size N. For each member of a batch, we expect M separate states (particles) and one unique observation.

- Parameters:

states (torch.Tensor) – States to pass to our observation model. Shape should be (N, M, state_dim).

observations (torch.Tensor) – Measurement inputs. Should be a tensor of shape (N, ...).

- Returns:

torch.Tensor – Log-likelihoods of each state, conditioned on a corresponding observation. Shape should be (N, M).
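One way to turn a Kalman filter measurement model into a particle filter one is to proceed in three steps. First, run all N × M particles through the model in a single batch. Second, treat each predicted observation and Cholesky factor as a multivariate Gaussian. Third, score the batch element's observation under every particle's Gaussian. The sketch below is written as a plain function with a hypothetical name and a toy identity model. torchfilter's wrapper may be implemented differently.

```python
import torch
from torch.distributions import MultivariateNormal


def kf_to_pf_log_likelihood(kf_measurement_model, *, states, observations):
    """Convert (states -> expected observations, scale_tril) into log p(z | x).

    states: (N, M, state_dim); observations: (N, observation_dim).
    """
    N, M, state_dim = states.shape
    # Evaluate all N * M particles in one batched call.
    pred_obs, scale_tril = kf_measurement_model(states=states.reshape(N * M, state_dim))
    obs_dim = pred_obs.shape[-1]
    dist = MultivariateNormal(
        loc=pred_obs.reshape(N, M, obs_dim),
        scale_tril=scale_tril.reshape(N, M, obs_dim, obs_dim),
    )
    # Broadcast each batch element's single observation across its M particles.
    return dist.log_prob(observations[:, None, :])  # (N, M)


def identity_model(*, states):
    # Toy Kalman filter measurement model: z = x + v, with v ~ N(0, I).
    n, d = states.shape
    return states, torch.eye(d).expand(n, d, d)


particles = torch.randn(2, 5, 3)
obs = torch.randn(2, 3)
log_likelihoods = kf_to_pf_log_likelihood(identity_model, states=particles, observations=obs)
```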

- class torchfilter.base.VirtualSensorModel(state_dim: int)

Bases: abc.ABC, torch.nn.Module

Virtual sensor base class for our differentiable Kalman filters.

Maps each observation input to a predicted state and uncertainty, in the style of BackpropKF. This is often necessary for complex observation spaces like images or point clouds, where it’s not possible to learn a standard state->observation measurement model.

- state_dim

Dimensionality of our state.

- Type:

int

- abstract forward(self, *, observations: types.ObservationsTorch) → Tuple[types.StatesTorch, types.ScaleTrilTorch]

Predicts states and uncertainties from observation inputs.

Uncertainties should be lower-triangular Cholesky decompositions of covariance matrices.

- Parameters:

observations (dict or torch.Tensor) – Measurement inputs. Should be either a dict of tensors or a tensor of shape (N, ...).

- Returns:

Tuple[torch.Tensor, torch.Tensor] – Predicted states and uncertainties. States should have shape (N, state_dim), and uncertainties should be lower triangular with shape (N, state_dim, state_dim).
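A virtual sensor is a network that regresses a state and an uncertainty directly from raw observations. Below is a minimal sketch. It uses a small MLP on flattened tensor observations; an image input would more likely use a CNN backbone. It also assumes a diagonal covariance, a simplification, since a full lower-triangular factor could be predicted instead. Class and layer names are hypothetical, and the sketch subclasses torch.nn.Module rather than the torchfilter base class.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MLPVirtualSensor(nn.Module):
    """Hypothetical virtual sensor: observation features -> (state, scale_tril)."""

    def __init__(self, observation_dim: int, state_dim: int, hidden_dim: int = 64):
        super().__init__()
        self.state_dim = state_dim
        self.backbone = nn.Sequential(nn.Linear(observation_dim, hidden_dim), nn.ReLU())
        self.state_head = nn.Linear(hidden_dim, state_dim)
        self.std_head = nn.Linear(hidden_dim, state_dim)

    def forward(self, *, observations: torch.Tensor):
        # Flatten everything after the batch axis, e.g. (N, 4, 4) -> (N, 16).
        features = self.backbone(observations.flatten(start_dim=1))
        states = self.state_head(features)  # (N, state_dim)
        # softplus plus a small floor keeps the standard deviations strictly positive.
        std = F.softplus(self.std_head(features)) + 1e-4
        # A diagonal matrix with a positive diagonal is a valid Cholesky factor.
        return states, torch.diag_embed(std)  # (N, state_dim, state_dim)


sensor = MLPVirtualSensor(observation_dim=16, state_dim=3)
pred_states, pred_tril = sensor(observations=torch.randn(4, 4, 4))
```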